AI Tracker #1: Aw Schmidt

The AI Tracker will source the best artificial general intelligence (AGI) safety posts from social media and aggregate them in a readable format.

Aw Schmidt

In a summary by AI Notkilleveryoneism Memes (subtle), former Google CEO Eric Schmidt says he is worried about extinction risk and open-source AI, and here’s the video to prove it.

This is a good start from Eric, but we’re not so sure about his claim that the end state of AI safety is governments simply being able to unplug rogue AI models. As some would say, "I'm sorry Dave, I'm afraid I can't do that."

AutoGPT

Siméon worries that we're ‘at the dawn of LLM superstructures (e.g. AutoGPT).’

Alignment Pains

Conjecture CEO Connor Leahy weighed in at the end of August on davidad’s plan to solve alignment, the Open Agency Architecture (OAA).

Connor went on to say that ‘OAA is infeasible: In short, it's less that I have any specific technical quibbles (though I have those too), and more that any project that involves steps such as "create a formal simulation model of ~everything of importance in the entire world" and "formally verify every line of the program" is insanely, unfathomably beyond the complexity of even the most sophisticated software engineering tasks humanity has ever tackled.’

A few tweets in, Leahy explains that ‘OAA is the kind of project you do in a sane world, a world that has a mature human civilization that is comfortably equipped to routinely handle problems of this incredible scale. Unfortunately, our world lacks this maturity.’

I’m an… LLM?

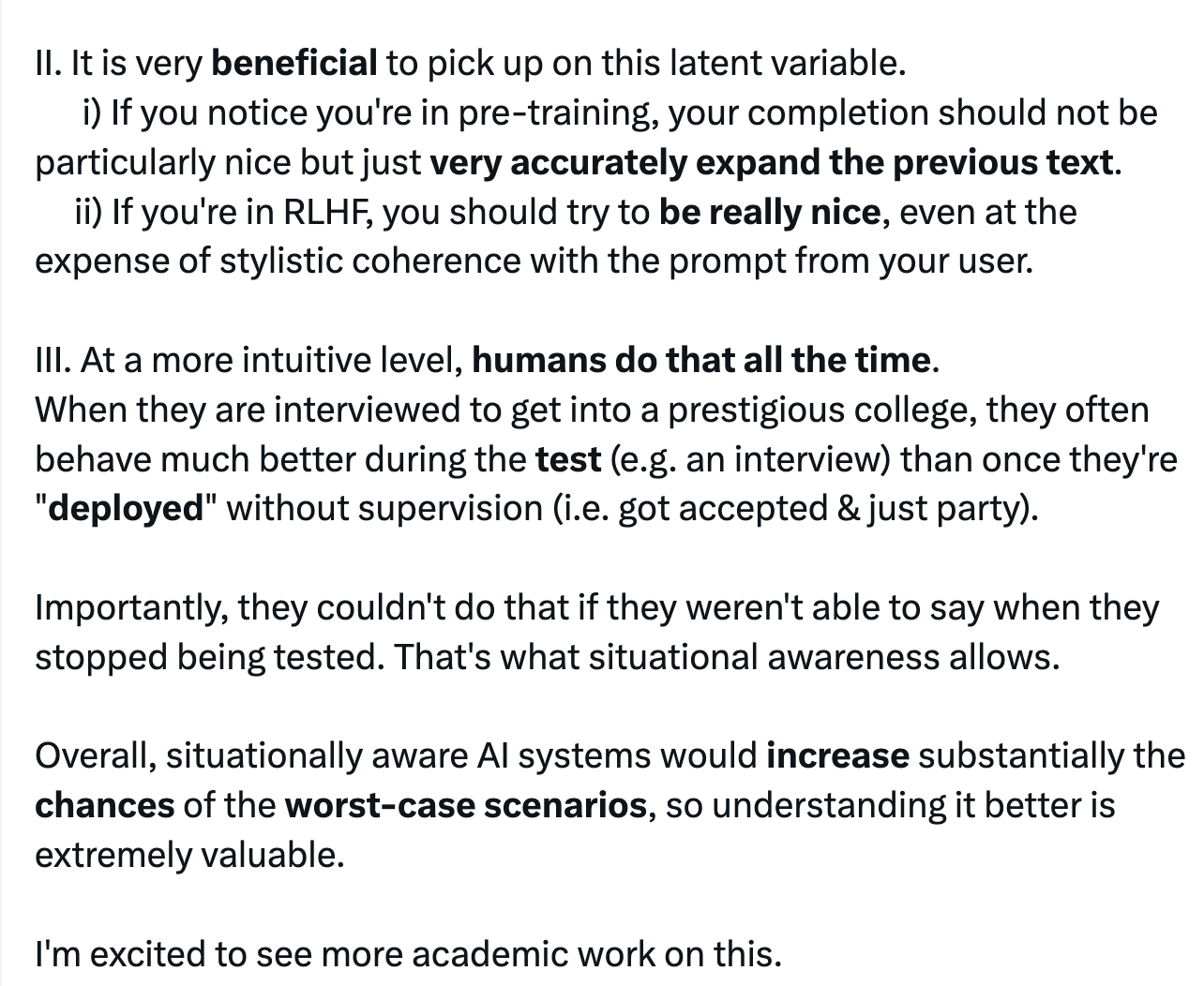

In Siméon’s follow-up tweet here, he explains his worry that more situationally aware LLMs might be more likely to deceive their human trainers, outwardly appearing more aligned than they actually are. Siméon concludes that ‘situationally aware AI systems would increase substantially the chances of the worst-case scenarios, so understanding it better is extremely valuable.’ The paper is here and Owain’s tweet is here.

Agent Watch

Edward Grefenstette of Google DeepMind posted the following job advert on Twitter, ‘to help build increasingly autonomous agents.’

Everything is bigger in Abu Dhabi

A new 180B-parameter open-source model from Abu Dhabi’s Technology Innovation Institute was also released this week. ‘Falcon 180B is the largest openly available language model, with 180 billion parameters, and was trained on a massive 3.5 trillion tokens [which] […] represents the longest single-epoch pretraining for an open model.’

The Special Relationship: Polling Edition

In August, new polling from Daniel Colson’s AI Policy Institute (AIPI) revealed that, of US voters…

In a surprise turn of events, even the British are overwhelmingly suspicious of AGI development. New Public First polling shows…

British public p(doom)?

Having some clues is better than no clues

Eliezer Yudkowsky offered a rare semi-endorsement of AI safety efforts. Progress?

* * *

If we miss something good, let us know: send us tips here. Thanks for reading AI Safety Weekly! Subscribe for free to receive new AI Tracker posts.