AI Tracker #13: Tumultuous Trilogue Time

A lot of high-quality AI safety conversation happens on Twitter. Unfortunately, this means great write-ups and content get buried within days. We've set up AI Tracker to aggregate this content.

Tumultuous Trilogue Time

European AI leaders are [as of writing] locked in a meeting room, battling it out on Day 2 of their all-important Trilogue discussions. On Wednesday they were in there for well over 22 hours, and that was only the first step!

Among other policy issues such as European security forces using AI for facial recognition, the talks also focus on whether or not foundation models should be exempted from the EU AI Act.

As always, Euractiv’s man on the beat Luca Bertuzzi has the lay of the land before anyone else.

AIPI’s Polling Powerhouse

In another supremely interesting round of polling following the OpenAI drama, AIPI’s Daniel Colson splashes in POLITICO and tweets that ‘59% of Americans agree that the events increase the need for government regulation.’

Deepfake Porn Proliferation

A journalist at 404 Media explains how ‘Leaked Slack chats show how OctoML, the company that powers Civitai, thought it was generating images that “could be categorized as child pornography,” but kept working with Civitai anyway.’

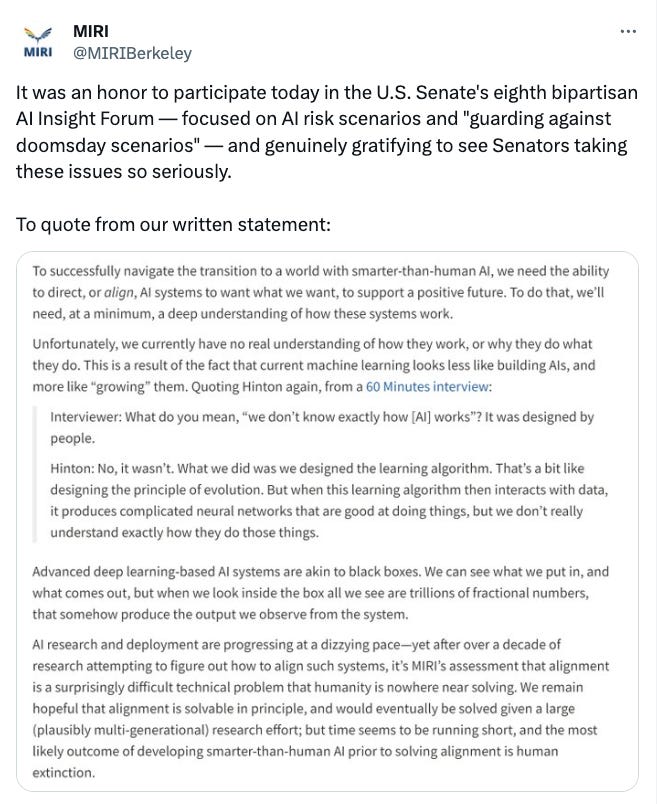

MIRI, Meet Senate

MIRI joined the U.S. Senate's eighth bipartisan AI Insight Forum, which focused on AI risk scenarios and "guarding against doomsday scenarios".

After the event, MIRI tweeted that it was ‘genuinely gratifying to see Senators taking these issues so seriously.’

An e/acc, Transhumanist, Physicist, All Walk Into A Room…

Joscha Bach called the Future of Life Institute a ‘“doomer” organization: they literally aim to stop all AI research (because they are deeply scared that AI will kill everyone).’

FLI’s Max Tegmark asked Joscha: ‘why are you spreading misinformation about FLI (“literally aim to stop all AI research”) when you know that I’m doing AI research?’

And Anders Sandberg wanted to point out: ‘[I] likely count as a doomer since I think there are good reasons to be very, very careful about AI. Because I want the weird grand creepy posthuman future, not a smoking ruin.’

No punchline, sorry.

Gemini Season

AI Safety Memes gives us their lay of the land on Google’s Gemini release in what is the first week of Gemini Season.

* * *

If we miss something good, let us know: send us tips here.